OLED TV technology has captured the imagination of video enthusiasts over the past few years. Just like plasma display panel (PDP) technology, OLED is self-emissive, meaning that every pixel can be turned on and off individually, contributing to the highly desirable picture quality attributes of absolute blacks and infinite contrast. At the CES 2017 consumer electronics trade show that’s going to take place in Las Vegas next month, more TV brands will likely join LG Electronics in unveiling their shiny new OLED televisions (with panels sourced from LG Display, the lone vendor of commercially viable TV-sized OLED screens).

As outstanding as OLED technology is, there lie a few challenges ahead, especially as the film and broadcast industry marches relentlessly towards HDR (high dynamic range) delivery. We’ve reviewed and calibrated almost every OLED television on the market throughout 2016, and while OLED reigns supreme in displaying SDR (standard dynamic range) material thanks to true blacks, vibrant colours and wide viewing angles (almost no loss of contrast and saturation off-axis), there have been some kinks with HDR.

Like it or not, peak brightness is central to the entire HDR experience. The ST.2084 PQ (perceptual quantiser) EOTF (electro-optical transfer function) that underpins both the HDR10 standard and Dolby’s proprietary Dolby Vision format goes all the way up to 10,000 nits as far as peak luminance is concerned, although in the real world, Ultra HD Blu-rays are mastered to either 1,000 nits (on Sony BVM-X300 reference broadcast OLED monitors) or 4,000 nits (on Dolby Pulsar monitors).
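For readers who want to see how the PQ curve ties signal level to absolute luminance, here is a minimal Python sketch of the ST.2084 EOTF and its inverse. The constants are the ones published in the standard; the function names are our own, and the code is an illustration rather than a reference implementation.

```python
# SMPTE ST 2084 (PQ) constants, as published in the standard.
M1 = 2610 / 16384          # 0.1593017578125
M2 = 2523 / 4096 * 128     # 78.84375
C1 = 3424 / 4096           # 0.8359375
C2 = 2413 / 4096 * 32      # 18.8515625
C3 = 2392 / 4096 * 32      # 18.6875
PEAK_NITS = 10000.0


def pq_eotf(signal: float) -> float:
    """Map a normalised PQ signal (0.0 to 1.0) to absolute luminance in nits."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Map absolute luminance in nits to a normalised PQ signal (0.0 to 1.0)."""
    y = (min(max(nits, 0.0), PEAK_NITS) / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


if __name__ == "__main__":
    # Luminance levels mentioned in this article, plus the PQ ceiling.
    for nits in (100, 650, 1000, 4000, 10000):
        print(f"{nits:>6} nits -> PQ signal {pq_inverse_eotf(nits):.4f}")
```

Because the curve is perceptual rather than linear, 1,000 nits already sits at roughly three quarters of the signal range, with the remainder reserved for highlights all the way up to 10,000 nits.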

Once calibrated to D65 (yes, HDR shares the same white point as SDR), none of the 2016 OLEDs we’ve tested in our lab or calibrated for owners in the wild could exceed 750 nits in peak brightness when measured on a 10% window (the smallest window size stipulated by the UHD Alliance for Ultra HD Premium certification). Due to the effects of ABL (Automatic Brightness Limiter) circuitry, most 2016 OLED TVs can only deliver a light output of around 120 nits when asked to display a full-field white raster, even though we’ll be the first to admit that such high APL (Average Picture Level) is extremely rare in real-life material.

This is the point where some misguided OLED owners will put forth the argument that “I don’t need more brightness; 300/400/500 nits already nearly blinded me” or “1000 nits? I don’t want to wear sunglasses when I’m watching TV!”. To clarify, this extra peak brightness capacity is applied not to the whole picture, but only to selected parts of the HDR image – specifically specular highlights – so that reflections off a shiny surface look more realistic, creating a greater sense of depth. The higher the peak brightness, the more bright highlight detail can be resolved and reproduced with clarity, and the more accurate the displayed image is relative to the original creative intent.

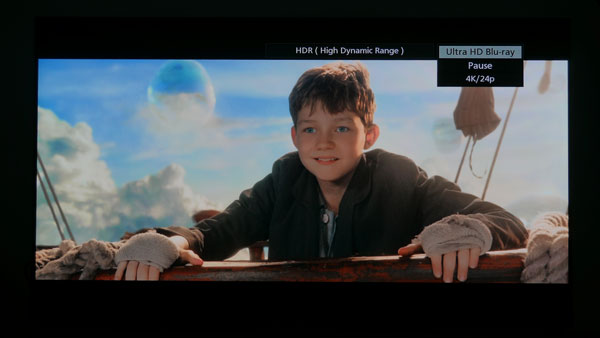

Without proper tone-mapping (we’ll explain this later), bright detail whose luminance value exceeds the native peak brightness of the HDR TV will simply be discarded and not displayed (technically this is known as “clipping”). Here’s an example from the 4K Blu-ray of Pan (which is mastered to 4000 nits) as presented on a 650-nit display with minimal tone-mapping:

Notice how the details in the clouds on the right of the screen, not to mention the outline of the bubble on the left, were blown out, since the display was unable to resolve these highlight details. Here’s how the image should look with more accurate tone-mapping:

As you can see, if tone-mapping is taken out of the equation, an ST.2084-compatible display’s peak brightness directly affects how much bright detail is present in the picture, rather than merely how intense the bright detail is. If you’ve seen and disliked crushed shadow detail on any television, clipped highlight detail is a very real problem at the other end of the contrast ratio spectrum too.
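To put numbers on the clipping effect, the following Python sketch clamps a made-up highlight gradient to the peak brightness of three displays and counts how many distinct levels survive. The gradient values and the `hard_clip` helper are hypothetical, chosen purely for illustration; no real television clips quite this crudely.

```python
def hard_clip(scene_nits, display_peak_nits):
    """Clamp each mastered luminance value to the display's peak brightness."""
    return [min(value, display_peak_nits) for value in scene_nits]


# Hypothetical highlight gradient, e.g. across a bright cloud (values in nits).
cloud_gradient = [400, 600, 800, 1200, 2000, 3000, 4000]

for peak in (650, 1800, 4000):
    shown = hard_clip(cloud_gradient, peak)
    distinct = len(set(shown))
    print(f"{peak:>4}-nit display: {shown} -> {distinct} distinct levels")
```

On the 650-nit display, every step above 650 nits collapses into the same value, which is exactly why the detail disappears rather than merely looking dimmer.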

We’ve used the term tone-mapping several times in the preceding paragraphs, so we’d better explain what it is. In HDR phraseology, tone-mapping is used generically to describe the process of adjusting the tonal range of HDR content to fit a TV whose peak brightness and colour gamut coverage are lower than those of the mastering display.

Since there’s currently no standard for tone-mapping, each TV manufacturer is free to use its own approach. With static HDR metadata (as implemented on Ultra HD Blu-rays as of Dec 2016), as a general rule of thumb a choice has to be made between the brightness of the picture (i.e. Average Picture Level or APL) and the amount of highlight detail retained. Assuming an HDR TV’s peak brightness is lower than that of the mastering monitor, attempting to display more highlight detail will decrease the APL and make the overall image dimmer.
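The trade-off can be illustrated with a deliberately simple static tone curve: luminance passes through untouched up to a “knee”, and everything above is squeezed linearly into the remaining headroom. This is our own toy model with hypothetical scene values, not any manufacturer’s actual algorithm, and the plain mean used here is only a crude stand-in for APL.

```python
def knee_rolloff(nits, display_peak, mastering_peak, knee_fraction):
    """Pass values through up to the knee, then squeeze the rest linearly
    into the headroom between the knee and the display's peak."""
    knee = knee_fraction * display_peak
    if nits <= knee:
        return float(nits)
    headroom = display_peak - knee
    return knee + (nits - knee) * headroom / (mastering_peak - knee)


def average_level(values):
    """Crude stand-in for APL: the plain mean of the listed luminance values."""
    return sum(values) / len(values)


# Hypothetical scene samples in nits, mastered on a 4000-nit monitor.
scene = [50, 200, 400, 1000, 2000, 4000]
DISPLAY_PEAK, MASTERING_PEAK = 650, 4000

for knee_fraction in (0.9, 0.5):
    mapped = [knee_rolloff(v, DISPLAY_PEAK, MASTERING_PEAK, knee_fraction)
              for v in scene]
    highlight_gap = mapped[-1] - mapped[-2]  # separation between 2000 and 4000 nits
    print(f"knee at {knee_fraction:.0%} of peak: "
          f"average {average_level(mapped):.0f} nits, "
          f"top highlight step {highlight_gap:.0f} nits")
```

Lowering the knee gives the brightest highlights more room to stay distinct from one another, but it also pulls down everything above the knee, so the average level drops. That is the rule of thumb in miniature.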

Dynamic metadata with scene-by-scene optimisation should prove more effective in maintaining the creative intent, but here’s the bombshell: the higher a display’s peak brightness, the less tone-mapping it has to do. We’ve analysed many different tone-mapping implementations on the various televisions we’ve reviewed throughout 2016, and they invariably introduced some issues, ranging from an overly dark or bright picture to visible posterisation at certain luminance levels.

Besides determining the amount of highlight detail displayed from source to screen sans tone-mapping, peak brightness also has a significant impact on colour reproduction, more so in HDR than in SDR.

“But wait,” we hear you ask. “All UHD Premium-certified TVs should cover at least 90% of the DCI-P3 colour space anyway. Why should their colours be affected by peak brightness in any noticeable manner?”

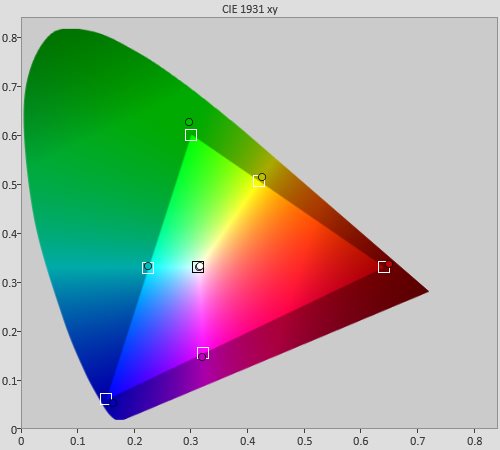

And therein lies the problem. For the longest time, colour performance in consumer displays has been represented using the CIE chromaticity diagram, which is basically a 2D chart. The 1931 xy version (adopted by many technical publications including ourselves) is generally used to depict hue and saturation, but not the third – and perhaps most important – parameter of colour brightness.

A typical CIE 1931 xy chromaticity diagram

In other words, the colour palette in imaging systems is actually three-dimensional (hence “colour volume”), but we’ve been able to put up with 2D chromaticity diagrams for so long precisely because of the nominal 100-nit peak white of SDR (standard dynamic range) mastering – taking measurements at one single level of 75% intensity (or 75 nits) was enough to give a reasonably good account of the colour capabilities of SDR displays.

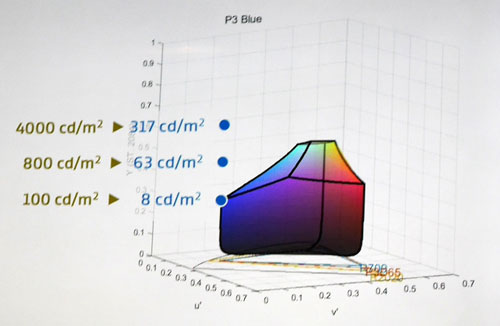

With HDR demanding peak brightness of 1000 or even 4000 nits, plotting 2D colour gamuts – essentially capturing only one horizontal snapshot slice from a vertical cylinder of colour volume – is clearly no longer sufficient. Consider a DCI-P3 blue pixel: on a 100-nit display, the brightest the pixel would get is 8 nits; whereas on a 4000-nit display it would be a very bright yet still fully saturated 317 nits! The blue pixel would look very different on a 100-nit display versus a 4000-nit display, even though it would occupy the same coordinates on a 2D CIE chart.
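The blue-pixel figures follow from how little each primary contributes to the luminance of white. Here is a short Python sketch of the arithmetic, assuming the D65 variant of P3 and using commonly quoted, rounded luminance coefficients; the names `P3_D65_LUMA` and `primary_peak_nits` are ours.

```python
# Approximate share of white's luminance contributed by each P3-D65 primary
# (rounded values; an assumption of this sketch, not taken from the article).
P3_D65_LUMA = {"red": 0.2290, "green": 0.6917, "blue": 0.0793}


def primary_peak_nits(primary, peak_white_nits):
    """Brightest a fully saturated primary can get for a given peak white."""
    return P3_D65_LUMA[primary] * peak_white_nits


for peak_white in (100, 1000, 4000):
    blue = primary_peak_nits("blue", peak_white)
    print(f"{peak_white:>4}-nit peak white -> saturated blue up to {blue:.0f} nits")
```

Since blue accounts for only about 8% of white’s luminance, a fully saturated blue tops out at around 8 nits on a 100-nit display and around 317 nits on a 4000-nit one.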

Colour volume chart showing P3 blue primary at different nit levels (Credit: Dolby)

Assuming the same 2D colour gamut coverage, it follows then that the higher the peak brightness, the greater the colour volume, and the more colours are available in the palette for faithful reproduction of the creative intent. As an example, when we reviewed the Sony ZD9, which was capable of delivering 1800 nits of peak luminance, we ran a side-by-side comparison against a 2016 LG OLED whose peak brightness topped out at 630 nits. In the Times Square fight sequence in The Amazing Spider-Man 2 (a 4K BD which was mastered to 4000 nits), the Sony ZD9 managed to paint Electro’s electricity bolts (timecode 00:53:29) in a convincing blue hue, whilst they were whitening out on the LG OLED.
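One plausible way to picture the whitening is a toy model in which a display that has run out of blue tries to hold the requested luminance by adding red and green. The Python sketch below makes that assumption explicit; it does not describe how either television actually processes the signal. The 120-nit target is a hypothetical value picked for illustration, and the luminance coefficients are rounded P3-D65 figures.

```python
# Rounded P3-D65 luminance coefficients (assumed for this sketch).
LUMA_R, LUMA_G, LUMA_B = 0.2290, 0.6917, 0.0793


def blue_highlight_drive(target_nits, peak_white_nits):
    """Linear (r, g, b) drive levels a toy display needs to hit target_nits
    for a nominally pure-blue highlight, keeping luminance over saturation."""
    blue_only_max = LUMA_B * peak_white_nits
    if target_nits <= blue_only_max:
        # Enough headroom: blue alone reaches the target, so it stays saturated.
        return (0.0, 0.0, target_nits / blue_only_max)
    # Blue is maxed out: raise red and green equally to cover the shortfall,
    # which pulls the colour towards white.
    extra = (target_nits / peak_white_nits - LUMA_B) / (LUMA_R + LUMA_G)
    return (min(extra, 1.0), min(extra, 1.0), 1.0)


TARGET_NITS = 120  # hypothetical luminance of a bright blue highlight

for peak_white in (1800, 630):
    r, g, b = blue_highlight_drive(TARGET_NITS, peak_white)
    print(f"{peak_white:>4}-nit display: r={r:.2f} g={g:.2f} b={b:.2f}")
```

In this model the 1800-nit display reaches the target with blue alone, while the 630-nit display has to mix in red and green, desaturating the highlight towards white.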

Can better tone-mapping compensate for reduced colour volume caused by lower peak brightness? The honest answer is we don’t know: none of the 2016 OLEDs exhibited flawless tone-mapping (although LG did improve it as the year wore on), and there’s virtually no Dolby Vision content (which promises superior tone-mapping through dynamic metadata) in the UK beyond some USB stick demos at the time of publication, so we’ll have to wait until next year when more non-LG OLED TVs and Dolby Vision material hit the market before reaching any definitive conclusion. One thing’s for certain though – rendering colours that are both bright and saturated would be much easier with a high brightness reserve.

For ages, videophiles all around the world have been pining for the success of OLED TV: with the promise of true blacks, vibrant colours, wide viewing angles and ultra-thin form factor, what’s not to like? And once LG Display managed to improve the yields of its WRGB OLED panels to commercially viable levels, OLED looked set to become the future of television.

However, following the arrival and proliferation of HDR this year, things have suddenly become less clear-cut. In our annual TV shootout back in July, the Samsung KS9500 received the most votes from the audience for HDR presentation, followed closely by the Panasonic DX900, with both finishing ahead of the LG E6 OLED.

Don’t get us wrong: for watching SDR content, there’s nothing we would prefer over the 2016 lineup of LG OLED TVs (except perhaps Panasonic’s final generation of plasmas for their innate motion clarity). But HDR provides an undeniably superior viewing experience to SDR, and while HDR content remains thin on the ground, it’s growing in quantity and maturing in quality.

A film producer recently told HDTVTest, “You don’t know what HDR is supposed to look like until you’ve watched Chappie on a 4000-nit Dolby Pulsar monitor”. We certainly hope 2017 will bring OLED televisions with higher peak brightness, greater colour volume and better tone-mapping to fulfil the creative intent of HDR movies in a more accurate manner.